Viral videos abound of humanoid robots performing amazing feats of acrobatics and dance, but videos of a humanoid robot performing a common household task or easily traversing a new multi-terrain environment without human control are much rarer. This is because training humanoid robots to perform these seemingly simple functions depends on simulation training data, and such data often lack the complex dynamics and degrees of freedom of motion that are inherent in humanoid robots.

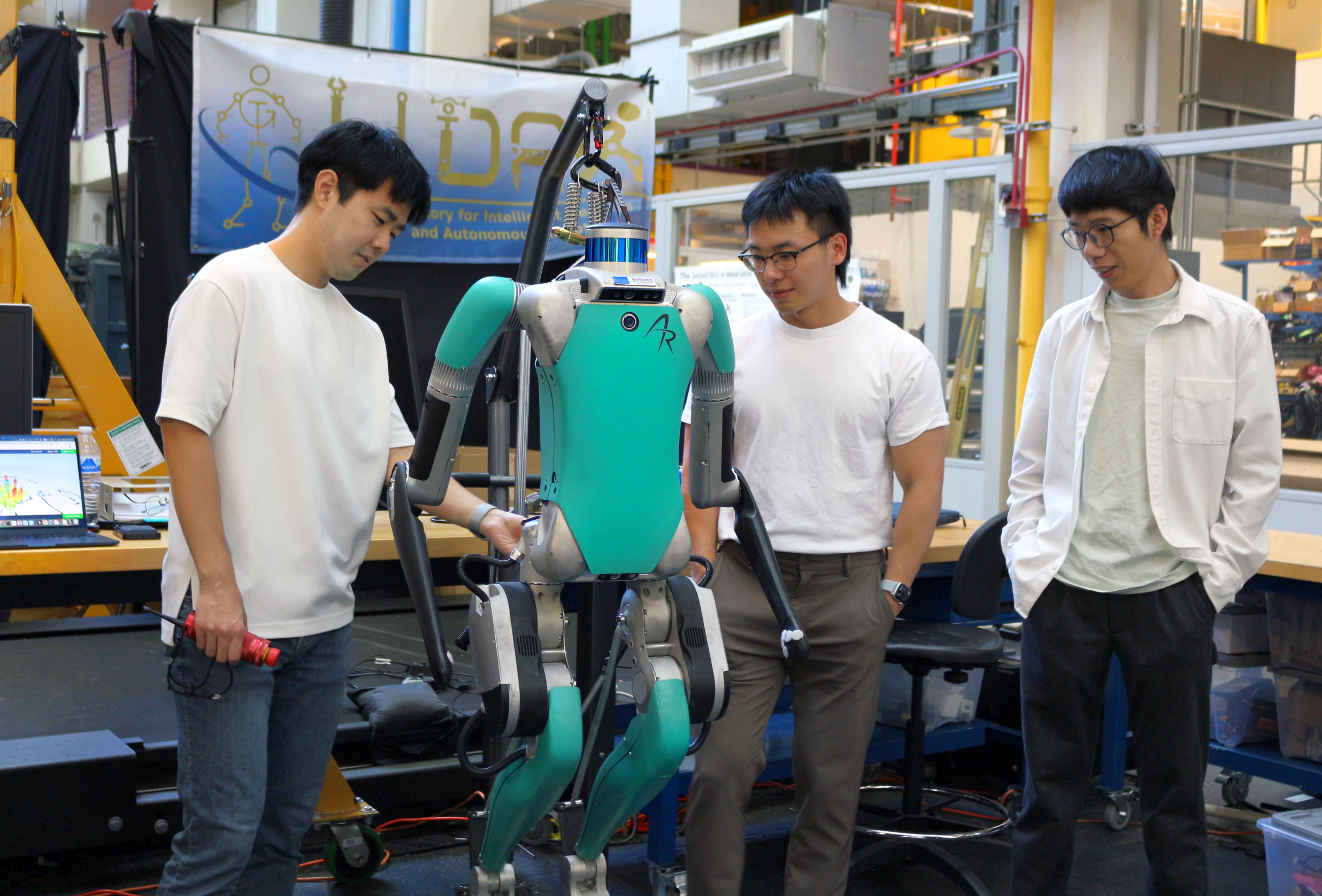

To achieve better training outcomes with faster deployment, Fukang Liu and Feiyang Wu, graduate students advised by Professor Ye Zhao of the Woodruff School of Mechanical Engineering, a faculty member of the Institute for Robotics and Intelligent Machines (IRIM), have published a pair of papers in IEEE Robotics and Automation Letters. The work is a collaboration with three other IRIM-affiliated faculty members, Profs. Danfei Xu, Yue Chen, and Sehoon Ha, as well as Prof. Anqi Wu from the School of Computational Science and Engineering.

To enable humanoid robots to perform complex whole-body movements in the real world, Fukang led a team that developed Opt2Skill, a hybrid robot learning framework that combines model-based trajectory optimization with reinforcement learning (RL). The framework integrates dynamics and contacts into the trajectory planning process and generates high-quality, dynamically feasible datasets, which result in more reliable motion learning for humanoid robots and improved position tracking and task success rates. The approach offers a promising way to improve the performance and generalization of humanoid RL policies. Incorporating torque data also improved motion stability and force tracking in contact-rich scenarios, demonstrating that torque information plays a key role in learning physically consistent humanoid behaviors.

“While other datasets, such as inverse kinematics or human demonstrations, are valuable, they don’t always capture the dynamics needed for reliable whole-body humanoid control,” said Fukang Liu. “With our Opt2Skill framework, we combine trajectory optimization with reinforcement learning to generate and leverage high-quality, dynamically feasible motion data. This integrated approach gives robots a richer and more physically grounded training process, enabling them to learn these complex tasks more reliably and safely for real-world deployment.”
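As a rough illustration of how a reinforcement learning policy might be rewarded for following a dynamically feasible reference that includes torques, the sketch below scores one control step against reference joint positions and joint torques. It is not the reward used in the Opt2Skill paper: the function names, the exponential form, and every weight and scale (`w_q`, `w_tau`, `sigma_q`, `sigma_tau`) are assumptions chosen for illustration only.

```python
import math


def squared_error(values, reference):
    """Sum of squared differences between two equal-length sequences."""
    if len(values) != len(reference):
        raise ValueError("values and reference must have the same length")
    return sum((v - r) ** 2 for v, r in zip(values, reference))


def tracking_reward(q, tau, q_ref, tau_ref,
                    w_q=0.7, w_tau=0.3, sigma_q=0.25, sigma_tau=100.0):
    """Hypothetical per-step imitation reward (all defaults are assumed).

    q, q_ref     -- measured and reference joint positions (rad)
    tau, tau_ref -- measured and reference joint torques (N*m)
    Each term equals 1.0 for perfect tracking and decays toward 0.0
    as the squared tracking error grows.
    """
    r_q = math.exp(-squared_error(q, q_ref) / sigma_q)
    r_tau = math.exp(-squared_error(tau, tau_ref) / sigma_tau)
    return w_q * r_q + w_tau * r_tau


if __name__ == "__main__":
    # Made-up reference values for a two-joint example.
    q_ref, tau_ref = [0.10, -0.40], [12.0, -5.0]
    print(tracking_reward([0.12, -0.35], [10.0, -4.0], q_ref, tau_ref))
```

In a sketch like this, dropping the torque term (`w_tau=0.0`) leaves a position-only imitation reward, which is one simple way to picture the difference between kinematic references and references that also carry torque information.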

In another line of humanoid research, Feiyang established a one-stage training framework, Learn-to-Teach (L2T), that allows humanoid robots to learn locomotion more efficiently and with greater environmental adaptability. Traditional two-stage “teacher-student” approaches first train an expert in simulation and then retrain a limited-perception student. L2T instead trains both simultaneously, sharing knowledge and experiences in real time. The result of this two-way training is a 50% reduction in training data and time, while maintaining or surpassing state-of-the-art performance in humanoid locomotion. The lightweight policy learned through this process enables the lab’s humanoid robot to traverse more than a dozen real-world terrains, including grass, gravel, sand, stairs, and slopes, without retraining or depth sensors.

“By training an expert and a deployable controller together, we can turn rich simulation feedback into a lightweight policy that runs on real hardware, letting our humanoid adapt to uneven, unstructured terrain with far less data and hand-tuning than traditional methods,” said Feiyang Wu.
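To make the idea of training a teacher and a student in a single stage more concrete, here is a toy sketch in which both models are updated from the same samples inside one loop. It is not the L2T algorithm: the linear models, the made-up target `2*x + 3*h`, the plain gradient steps standing in for reinforcement learning updates, and the names `train_together` and `imitation_weight` are all assumptions for illustration. The teacher sees a privileged terrain value `h`; the student sees only a noisy proxy `p`.

```python
import random


def train_together(steps=5000, lr=0.02, imitation_weight=0.5, seed=0):
    """Toy one-stage teacher-student loop (illustrative assumptions only)."""
    rng = random.Random(seed)
    teacher = [0.0, 0.0]  # weights on (x, h): has access to privileged h
    student = [0.0, 0.0]  # weights on (x, p): only a noisy proxy of h
    for _ in range(steps):
        x = rng.uniform(-1.0, 1.0)      # ordinary observation
        h = rng.uniform(-1.0, 1.0)      # privileged terrain value
        p = h + rng.gauss(0.0, 0.1)     # what onboard sensing might give
        target = 2.0 * x + 3.0 * h      # made-up "ideal action"

        a_teacher = teacher[0] * x + teacher[1] * h
        a_student = student[0] * x + student[1] * p

        # Teacher step: squared-error task loss, a stand-in for an RL update.
        g_t = a_teacher - target
        teacher[0] -= lr * g_t * x
        teacher[1] -= lr * g_t * h

        # Student step on the SAME sample: task loss plus an imitation
        # term that pulls the student toward the teacher's action.
        g_s = (a_student - target) + imitation_weight * (a_student - a_teacher)
        student[0] -= lr * g_s * x
        student[1] -= lr * g_s * p
    return teacher, student


if __name__ == "__main__":
    teacher_w, student_w = train_together()
    print("teacher weights:", teacher_w)
    print("student weights:", student_w)
```

The point of the sketch is structural: there is no second training phase, and the student never reads `h`, so it could in principle be deployed with only the limited observations it was trained on.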

By applying these training processes, the team hopes to speed the development of deployable humanoid robots for home use, manufacturing, defense, and search and rescue assistance in dangerous environments. These methods also support advances in embodied intelligence, enabling robots to learn richer, more context-aware behaviors. Additionally, the training data process can be applied to research that aims to improve the functionality and adaptability of human assistive devices for medical and therapeutic uses.

“As humanoid robots move from controlled labs into messy, unpredictable real-world environments, the key is developing embodied intelligence, the ability for robots to sense, adapt, and act through their physical bodies,” said Professor Ye Zhao. “The innovations from our students push us closer to robots that can learn robust skills, navigate diverse terrains, and ultimately operate safely and reliably alongside people.”

Author - Christa M. Ernst

Citations

Liu F, Gu Z, Cai Y, Zhou Z, Jung H, Jang J, Zhao S, Ha S, Chen Y, Xu D, Zhao Y. Opt2Skill: Imitating dynamically-feasible whole-body trajectories for versatile humanoid loco-manipulation. IEEE Robotics and Automation Letters. 2025 Oct 13.

Wu F, Nal X, Jang J, Zhu W, Gu Z, Wu A, Zhao Y. Learn to teach: Sample-efficient privileged learning for humanoid locomotion over real-world uneven terrain. IEEE Robotics and Automation Letters. 2025 Jul 23.