ScienceMatters’ inaugural episode takes us back to the first day of the 2017-18 school year. As Georgia Tech welcomed students with a campus-wide solar eclipse viewing party, School of Psychology researchers recreated the eclipse experience for a blind man by giving voice to data in real time.

[Music]

- Renay San Miguel:

"Hello, I’m Renay San Miguel, and this is ScienceMatters, the podcast from the Georgia Tech College of Sciences. [Applause and cheering]"

- Renay San Miguel:

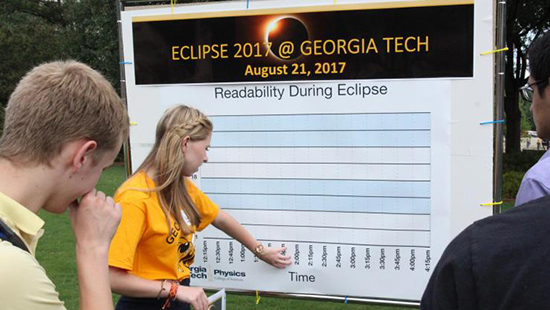

"August 21, 2017. The first day of fall classes at Georgia Tech comes complete with a solar eclipse — and a Tech Green eclipse watch party. Thousands enjoy an afternoon leading up to the big celestial event."

- Georgia Tech Student:

"I will always remember this, because I saw the eclipse and started college on the same day at the best university."

- Renay San Miguel:

"But as that’s going on in Midtown Atlanta…"

- James Boehm:

"I look forward to being able to appreciate and see the eclipse like everyone else does."

- Renay San Miguel:

"…James Boehm of Nashville, blind since he was 13 years old, travels with his wife to Hopkinsville, Kentucky, which is in the eclipse’s path of totality. Boehm is taking part in an AT&T experiment called Eclipse: Impossible. The company, with a big assist from Georgia Tech, will try to recreate the eclipse-watching experience for him—complete with an otherworldly soundtrack. Boehm wears special AT&T Aira glasses equipped with speakers, a camera, and a wireless connection. A person serving as an Aira guide sees what Boehm’s camera is showing, and he or she describes to Boehm the scene and people around him, and the sky above him. He’s also hearing a special soundtrack composed by Georgia Tech’s Sonification Lab, based in the School of Psychology. The lab’s researchers use sounds and music to give voice to data coming from the eclipse—all in real-time. [Eclipse sounds]"

- Renay San Miguel:

"The soundtrack changes as the sun starts to hide behind the moon… [Eclipse sounds]"

- Renay San Miguel:

"…as the temperature drops… [Eclipse sounds]"

- Renay San Miguel:

"…and as the corona explodes around the eclipsed sun. [Eclipse sounds]"

- Renay San Miguel:

"Again, here’s James Boehm."

- James Boehm:

"People think something like an eclipse, that would be impossible for someone who is blind to see everything that was going on around us, and it is something that will stick with us for the rest of our lives."

- Renay San Miguel:

"The eclipse soundtrack is one example of the Sonification Lab’s interdisciplinary research. Under the direction of School of Psychology Professor Bruce Walker, the lab studies sound as a way to represent data. It also studies human-computer interaction through non-traditional interfaces. That’s a fancy way of saying it researches trendy voice recognition technology like Apple’s Siri and Amazon’s Alexa. [Music]"

- Renay San Miguel:

"You’ve probably seen photos of Saturn’s rings. But have you ever heard them? [Saturn’s rings sounds]"

- Renay San Miguel:

"You know that the surface of Mercury is nice and toasty. But have you heard how hot it is there? [Mercury surface sounds] "

- Renay San Miguel:

"Those are just two samples of the 2017 Solar System Sonification research study done by Walker’s lab. Just as different sounds were assigned to different stages of the solar eclipse, certain sounds are attached to certain characteristics of the planets, such as mass, gravitational pull, temperature, and length of day. But just how are those sounds assigned? Here’s Bruce Walker:"

- Bruce Walker:

"Well, the first thing we’re trying to figure out is, what is the communicative intent? That is, what are we trying to get across, what’s the message? And once we know that message, then we can figure out how to take some numbers, some data, some values and represent them through sound.

So sonification is generally using non-speech sounds, and we have to figure out, if I increase the pitch of the sound, for example, does that mean the value that I’m representing is also going up? So... bom bom bom BOM BOM, is it getting hotter or is it getting colder? [Gravity sounds]"

- Bruce Walker:

"In many cases we’ll do a simple sonification, so you’ll think the temperature of Jupiter is different from the temperature of Mercury, so we would have different pitches, for example, to represent those different temperatures. However, you can get a little bit more playful, a little bit more creative and have other ways of representing attributes of a planet. One thing we tried to represent was gravity. What’s the amount of gravity or the gravitational pull on Jupiter vs. on the moon. [Jupiter gravity sounds]"
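
Walker's temperature example describes what is often called parameter mapping: a data value is scaled onto an acoustic dimension such as pitch, and the designer has to choose the polarity, that is, whether a higher value should sound higher or lower. The Python sketch below is a hypothetical illustration, not the Sonification Lab's code; the function name, the 220 to 880 Hz range, and the log-frequency scaling are assumptions made for the example.

```python
def value_to_frequency(value, lo, hi, f_min=220.0, f_max=880.0, polarity=1):
    """Map a data value in [lo, hi] onto a pitch in hertz (hypothetical sketch).

    polarity=+1: higher value -> higher pitch; polarity=-1 flips the mapping.
    Interpolation is geometric (log-frequency), so equal steps in the data
    are heard as equal musical intervals.
    """
    x = (value - lo) / (hi - lo)      # normalize to 0..1
    x = min(max(x, 0.0), 1.0)         # clamp out-of-range data
    if polarity < 0:
        x = 1.0 - x                   # reverse the polarity
    return f_min * (f_max / f_min) ** x


# Illustrative (made-up) values on a 0..100 scale.
for v in (0, 50, 100):
    print(v, value_to_frequency(v, 0, 100))
```

With these defaults, the midpoint of the data range lands one octave above the bottom of the pitch range. A mapping that is linear in hertz would instead make equal data steps sound like progressively smaller intervals toward the high end.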

- Bruce Walker:

"What we chose to do was to have a sound that seemed like a ball was dropped out of your hand, hit the ground, and bounced, so if you have a tennis ball and dropped it and it goes plunk plunk plunk, on a planet with less gravity the ball would bounce higher and the sound would be different. [Jupiter gravity sounds]"
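
The bouncing-ball idea can be sketched with an idealized ballistic model. In the hypothetical Python below, which is not the lab's implementation, a ball is dropped from an assumed height under a given gravity, and each impact keeps an assumed fraction (the coefficient of restitution) of its speed. The function returns the times at which the ball hits the ground; in a sonification, each time would trigger a "plunk". In this model, the gap between impacts scales as 1/sqrt(g), so the plunks on a low-gravity body like the Moon arrive more slowly than on Jupiter.

```python
import math

# Approximate surface gravity in m/s^2 (Jupiter's is quoted at the 1-bar level,
# since it has no solid surface).
GRAVITY = {"Moon": 1.62, "Earth": 9.81, "Jupiter": 24.79}


def bounce_times(g, drop_height=1.0, restitution=0.7, n_impacts=5):
    """Return the times (s) at which a dropped ball hits the ground.

    Idealized model: no air drag, and each impact keeps a fixed fraction
    (`restitution`, a hypothetical value) of the ball's speed.
    """
    t = math.sqrt(2.0 * drop_height / g)  # free fall to the first impact
    v = g * t                             # speed at the first impact
    times = [t]
    for _ in range(n_impacts - 1):
        v *= restitution                  # speed lost in the bounce
        t += 2.0 * v / g                  # time to rise and fall back
        times.append(t)
    return times


for body, g in GRAVITY.items():
    times = bounce_times(g)
    gaps = [round(b - a, 3) for a, b in zip(times, times[1:])]
    print(body, gaps)  # seconds between successive "plunks"
```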

- Renay San Miguel:

"Why attempt sonification in the first place? For Walker, it ties into the first of what he calls the four pillars of his lab’s research."

- Bruce Walker:

"The first pillar is formal education, school. How can we use sound, multimedia, multimodal user interfaces to make school more engaging, more rich, more effective, but also more accessible? There are plenty of blind and visually impaired students, for example, who have real struggles with the graphs and charts and the equations of our visually dominated school system.

So how can we work on projects to add audio, to make auditory graphics, to make auditory equations, to make auditory charts, using sound in all those ways to convey what’s happening in their educational world?

The second pillar is what we call informal education, the place where you learn something but not in school. Field trips, museums, aquariums, science centers, all the places your teacher might take you in a yellow bus if you’re lucky. How can we make going to the aquarium engaging, enriching, educational, entertaining, and accessible?

How can I convey to someone who can’t see what’s going on, not just the factual information but the visceral wonder of nature, ooh, aah? When the whale shark swims into view, lumbering along, giant of the ocean, it’s a sight that gives you goosebumps. Can’t see it? How can I give you goosebumps? I’m going to give you goosebumps through sound. [Music]"

- Bruce Walker:

"The third pillar is what we call electronic devices. That’s kind of a large category, covering everything from Siri to your thermostat. Can you talk to your Nest thermostat? How does your Apple Watch vibrate? What is the communication that you have to and from that? What are the gesture controls that you can use to control something? How do you talk to your television? For all of those kinds of electronic devices, how do we use multimodal input and output, plus all of these sociological, psychological, and cultural factors, to make those technologies more enjoyable, more effective, more acceptable, and so on? The fourth pillar of our research is driving, all the things that are related to driving."

- Waymo video:

"Back in 2009, in our early days at Google, we started working on self-driving cars. Today, we’re called Waymo, and our fully self-driving cars are on the road."

- Renay San Miguel:

"That’s from a Google video highlighting its Waymo self-driving auto technology. The Sonification Lab studies what might happen psychologically and socially to people using new technologies such as self-driving cars."

- Bruce Walker:

"So if you are in a car, and you want it to start driving, you want to hand over control to the car. How does that happen? What is the interface? Do you say, ‘Hey, car, your turn’? Or do you press a button? What if you accidentally press the button? Should we require some kind of more sophisticated two-button press, or a secret handshake? How do you communicate, in no uncertain terms, ‘Hey, car, you take the wheel’?"

- Renay San Miguel:

"Here’s another Waymo video which shows a couple being chauffeured around in a driverless car:"

- Waymo video:

"[Laughter]

Thank you, car.

[Laughter]

Yeah, thank you, car."

- Bruce Walker:

"Part of the work we do in the lab is in the driving world. When you’re developing an automated car, there are certainly the technology aspects: the sensors, how the car knows where it is, and how it knows or estimates what the other cars are going to do. All of that technology and engineering stuff is fascinating, but what we’re mostly interested in is how people interact with that technology.

I will say that in our lab we are mostly interested in what happens inside the car, so we don’t build cars. My lab is not an engineering group; we’re talking about how the human performs the driving tasks, and how the human interacts with the car in ways that are not related to driving.

[Music]

[Eclipse sounds]"

- Renay San Miguel:

"We’ve told you how Walker’s lab researchers study our reactions to new voice-driven technologies, whether in our cars, on our smartphones, or in our homes. His researchers also assign sounds to data as a way to make learning easier in school and to enrich the sensory impact of places like museums and aquariums.

And by giving voice to data, Walker’s research allows people with visual impairment to partake in experiences many of us take for granted."

- Bruce Walker:

"It’s not just that you were there at the eclipse but you were there with 10,000 other people, and the collective gasps and oohs and aahs. It’s not unlike watching a movie at home vs. watching a movie in a theater. Part of the experience is the fact that you are there with other people, so being able to share the visuals, being able to have a common understanding of what is going on, is crucial in order for you to be able to relate to what other people are experiencing. Unfortunately, people who are blind or visually impaired face obvious challenges in seeing the eclipse, seeing that it’s getting darker, seeing the cloud patterns, seeing that the sunlight filtered through the trees creates these fabulous patterns on the sidewalk. So as a result, people who are blind or visually impaired are largely shut out, not only of the experience of the eclipse but of 10,000 other people sharing it. So our goal in that was to try to break down some of those barriers. [Music]"

- Renay San Miguel:

"Walker says some of these same technologies could help firefighters when they run into smoky buildings, or soldiers battling their way through a forest.

One final thought: Walker wants to make clear that it’s easy to focus on the technologies and applications of sonification, but at the end of the day, he and his lab researchers are trying to expand their understanding of people: how they interact with their world, which involves memory, perception, and decision-making.

After all, someone has to hear these sounds, and act upon them.

My thanks to School of Psychology Professor Bruce Walker, director of the Georgia Tech Sonification Lab.

Siyan Zhou, a Ph.D. student who composed our theme music, was a research associate in Walker’s Sonification Lab.

I’m Renay San Miguel, and you’ve been listening to ScienceMatters, the podcast from the Georgia Tech College of Sciences.

[Music]"